May 11, 2023

by Sanji Bhal, Director, Marketing & Communications, ACD/Labs

The goal of data digitalization and data management projects is to leverage organizational knowledge to innovate and commercialize faster. The variety and volume of scientific data, however, make that challenging. Every data type raises its own difficulties, and analytical data is no exception. In a webinar, we brought together three experts to discuss key pain points and their experience creating global analytical knowledge management solutions. Read the highlights of their discussion here.

Director, Strategic Partnerships, ACD/Labs

Mark Kwasnik, Global Product Manager, Analytical Labs, Solvay

Nichola Davies, Director, Structural Chemistry, Oncology R&D, AstraZeneca

How did you become involved in analytical data management?

Nichola: I represent the very early research functions at AstraZeneca: medicinal chemistry groups that generate thousands of samples to test and select candidates. We wanted a centralized, cloud-based solution that would make analytical data accessible to all functions within the organization, and that is how I got involved in the Global Analytical Database project.

Mark: I started down this digital journey as a lab manager, leading a team working in chromatography and mass spectrometry, with purely selfish goals: to make my own job easier and improve my team’s efficiency. Once I started, I discovered there was so much more we could be doing with data.

Graham: I’ve been involved with numerous analytical data management projects for our customers. We see many commonalities in the challenges they face and their approaches to address them. The experience of the ACD/Labs team means we can provide an informed perspective to help organizational leaders attain their data management goals.

Analytical Data—An Enterprise Asset

Nichola: There needs to be a real transition away from thinking of data as a single-use piece of information that’s consumed and then no longer usable. The data in itself can be an incredibly valuable resource. Now, with the potential for AI and machine learning to derive further insight from that data, it’s really important to adopt that mindset and to think of a data management strategy that helps you realize the benefits.

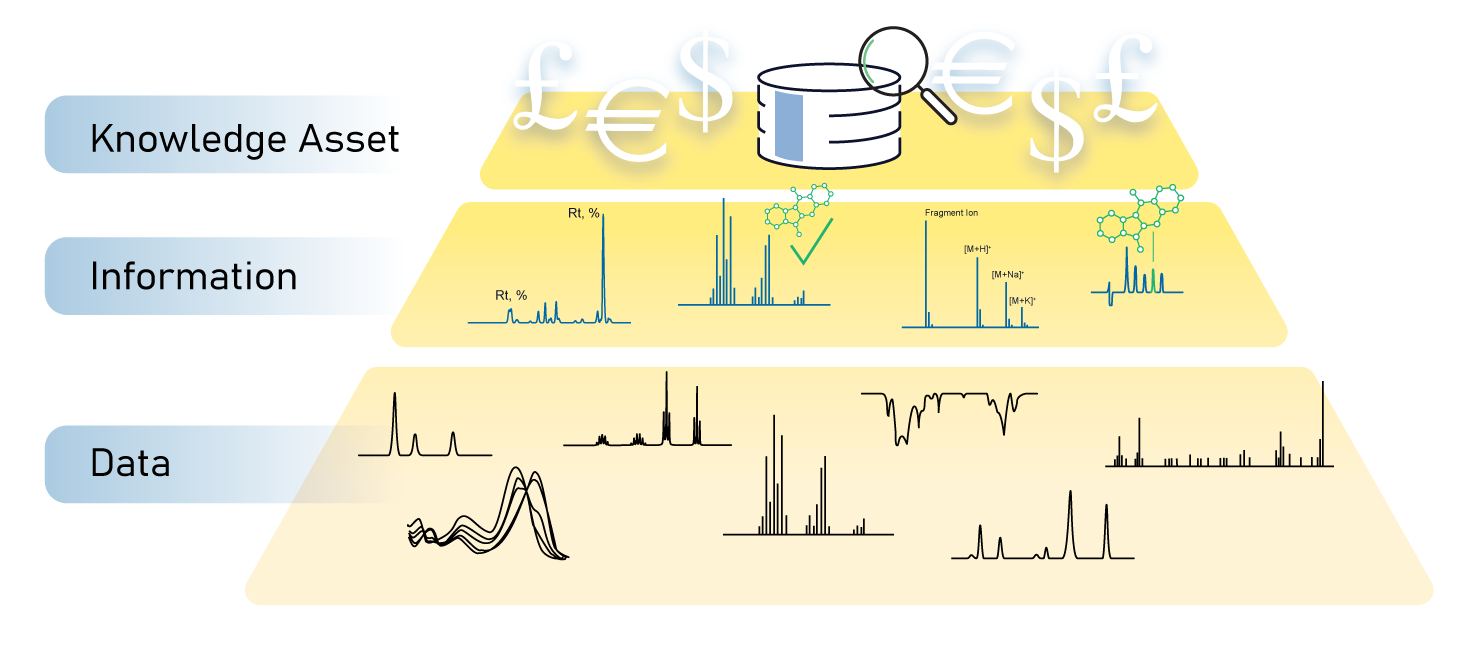

Graham: Years ago, data was used by humans, but there is increasing demand for data to be used by machines and in interactions between humans and machines. The paradigm shift in the last decade has been that more and more organizations view data as an asset: its value lies not just in solving immediate scientific problems, but also in the financial benefit it can bring to the organization.

In today’s R&D organizations, people are trying to interact with each other around data. That change is happening, and it depends on a data lifecycle that takes original data, converts it into information, and then uses that information in ways that generate value. There is increasing awareness that organizations need to invest in data management technologies, and I think this goes hand in hand with the recognition that data has value. As tough as the pandemic was, I think it served to heighten awareness that data and data management are critically important to the success and health of organizations.

The Data Access Challenge

Graham: Data accessibility issues take many forms with current technologies, but consistently across R&D, data is siloed in one way or another. It’s frozen into a location, a document format, or an image format. I’m sure some data is still not digitalized: it’s in notebooks that may not be electronic, or in instrument records that are not fully digitalized.

“Finding data within a lab or a site is easier than in the organization as a whole. When you can’t find data, it doesn’t exist.” – Mark Kwasnik

Nichola: I can find my own data readily, but if I want to be able to search pools of data to look for trends, or gain insights, or even access data from other company functions or geographies, that’s a real challenge. We use a lot of external contract research organizations and accessing the data they generate is even more difficult.

Mark: I agree, finding data within a lab or a site is easier than in the organization as a whole. It’s easier for scientists to re-prep and re-run a sample than to search through forty computers for existing data. Solvay is a global organization with sites all around the world. Even if the data at your particular site is structured and easy to use, that doesn’t mean you can reach across the continental divide and understand someone else’s data or data structure, even if they’re running the same methods and analyzing the same samples. And when you can’t find data, it doesn’t exist.

Why Analytical Data Heterogeneity Is Here to Stay

Graham: One of the findings of a recent analytical data management survey we carried out was that data heterogeneity is a key challenge. Scientists are using more than one analytical technique, perhaps two or more instruments, then two or more software applications for data analysis, and then trying to bring it all together. Ninety percent of the people we surveyed were dealing with diverse types of data.

The Legacy Instrument Reality

Mark: At Solvay’s global R&I centers, not only do we have a whole plethora of techniques to serve our customers, but even within each technique you will see a handful of different instruments. If you pick a single technique, like gas chromatography, in a newly built lab, you’ll find the same vendors and software: it’s nice and homogenized. But not every lab is new.

Existing labs have legacy equipment from different vendors, or even from the same vendor but on different software platforms. From a scientist’s perspective, this makes the lab itself harder to run, because the team must learn how to maintain and operate all these different GCs and software platforms. Putting on my data hat, when you want to ingest and process the data, you find that each vendor does things slightly differently.

To me as a scientist a chromatogram is a chromatogram, but to me as a data guy a chromatogram from seven different vendors is seven different “techniques”. Having said that, it is difficult to justify a large capital investment in hardware or software without new capabilities, just for the sake of standardization.

“To me as a scientist a chromatogram is a chromatogram, but to me as a data guy a chromatogram from seven different vendors is seven different ‘techniques’.” – Mark Kwasnik

Nichola: I’ve seen it from both sides—as a scientist and a manager. There are lots of reasons for the choices that you make in the lab environment. Sometimes it’s about familiarity, sometimes it’s to do with the support platforms you have in place. There’s a big overhead to keeping large analytical facilities running.

Scientific Freedom to Choose the Best Tool for the Job

Nichola: We consistently need to use at least two different types of data to characterize and confirm our structures and purities, to meet testing requirements. I agree that there is some merit in trying to consolidate to fewer vendor platforms, but as we’re looking to drug more and more challenging targets with increasing molecular complexity in Pharma, we want to be able to choose the best instrument for addressing our analytical needs. Sometimes you need a little heterogeneity.

“We want to be able to choose the best instrument for addressing our analytical needs.” – Nichola Davies

Mark: Agreed. As a pure data guy, I find harmonization of instruments and data great, but as a scientist I don’t want IT dictating what equipment and techniques I have access to. I want the freedom and flexibility to pick the best tool on the market.

Mergers and Acquisitions

Graham: With mergers and acquisitions in the pharma industry, we’ve seen that when two large companies merge, even if each has done great work standardizing its own technology, considerable additional effort is needed to manage instrument data.

Nichola: Yes, absolutely. We see that in mergers of large organizations, each company tends to have its preferred solution. That’s quite a big overhead for IT governance.

The Path to Effective Analytical Data Management

What data should be managed? All data, curated data, or just interpreted results?

Nichola: My preference would be all data. We don’t know what the future will hold yet in terms of how we’re going to be able to use data. If we’re not capturing it now, organizing it, and tagging it appropriately with metadata, then we’re preventing that future use.

Mark: I agree: all data, if it’s tagged properly. From an IT perspective, all data is quite an overhead, since data storage and architecture are expensive. But so is generating precious samples: the hazardous materials and the time to prep them, the expensive high-resolution mass spectrometer or NMR used to generate the data, and the analyst’s time to process it. Those also have a fixed cost associated with them.

Graham: Curated data is absolutely essential to proper data science and reference data also needs to be properly curated to be reliable in an organization. Tagging data to be able to understand it and access curated packets is a must.

Data Needs Context to Be Reusable

Mark: Even if I have beautifully digitized data, and access to the processed and raw data files, without context it means nothing to anyone else in the organization. You need the instrument metadata associated with it, and the analytical testing data. A chromatogram is great, but for GC, for example, if I don’t know whether a 5- or 60-meter column was used, or at what temperature, it’s essentially useless. It’s just taking up hard drive space.
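Mark’s GC example can be made concrete as a completeness check that flags records missing the context needed for reuse. The sketch below is a minimal Python illustration, assuming a hypothetical record structure and a hypothetical list of required fields; it does not reflect any ACD/Labs, Solvay, or instrument-vendor data format, and a real vocabulary would come out of stakeholder discussions.

```python
from dataclasses import dataclass, field

# Hypothetical minimum context for a GC run (assumption for illustration only);
# a real metadata vocabulary would be agreed with stakeholders.
REQUIRED_GC_FIELDS = ("column_length_m", "oven_temperature_c", "instrument_vendor", "sample_id")


@dataclass
class ChromatogramRecord:
    """A data file plus the metadata that gives it context (hypothetical structure)."""
    data_file: str
    metadata: dict = field(default_factory=dict)


def missing_context(record: ChromatogramRecord, required=REQUIRED_GC_FIELDS) -> list:
    """Return the required metadata fields that are absent or empty, in order."""
    return [name for name in required if record.metadata.get(name) in (None, "")]


# A run with column length and sample ID recorded, but no temperature or vendor.
run = ChromatogramRecord("run_042.cdf", {"column_length_m": 60, "sample_id": "S-001"})
print(missing_context(run))  # ['oven_temperature_c', 'instrument_vendor']
```

A check like this could run at ingestion time, so incomplete records are flagged while the analyst still remembers the missing details, rather than being discovered years later.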

“Just because data is digital, doesn’t mean you can use it again…without context it means nothing to anyone else in the organization.” – Mark Kwasnik

Graham: That must be part of the strategy—to determine what context is necessary. It’s great to have a vocabulary around metadata, for example, but you need to bring the right stakeholders together to ensure that the vocabulary captures the important information about sample preparation, methods, and equipment.

Mark: Context requirements may be very different, depending on where and how the data will be used. People generating data want very different metadata than internal or external customers submitting samples. The big dream for everyone is AI and machine learning, and those have very different data needs in order to make sense of, and use, gigantic datasets. Different users need slightly different things tied to the data to be able to leverage it. Just because data is digital, doesn’t mean you can use it again. You have to lay the foundations before you start building your house.

Bring Together the Right Stakeholders

Graham: At the outset of projects with customers, we go through “define and design.” We sit down with a variety of stakeholders and example data to talk through what they want from it. We find these sessions strategically very useful for organizations.

Nichola: It’s critical to involve the data scientists right at the beginning of developing your analytical data management strategy because if you aren’t capturing their needs at that point, it’s really difficult to build that in later.

Get Commitment

Nichola: I probably underestimated the amount of time I needed to dedicate to this project. There are many other pulls on my time. If you’re embarking on a big project like this, making sure you have buy-in from management to dedicate time to supporting this type of activity is essential. Commitment across the board matters. You have to have top-down support from management, a strategy, and the funding to implement it, but you also need buy-in from middle management and from those in the labs doing the legwork, because they already have a lot on their plate, and you are now adding more.

Mark: Absolutely, you can’t expect someone already working at 100% to put in extra time because you want data tagged. Everyone needs to know the benefit to them and to the organization.

Automate, Automate, Automate

Mark: If I’m going to ask scientists to fill in 5–10 metadata fields to make the data they’re capturing more usable in the future, I will try to build automation into the process to save them time elsewhere, perhaps by bypassing manual data entry or eliminating manual report creation.

Think More Broadly About Harmonization

Mark: It’s important to remember that even if two labs are doing the same work, they might not really be doing it the same way. Labs in two different countries might use different languages, different date formats, or commas versus periods as decimal separators. Harmonizing these little things makes a big difference. It may not be your job as the person deploying the overarching strategy, but you need to get the scientists in the different labs together to figure out what works best for them.
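The date and decimal-separator differences Mark mentions can be illustrated with a small normalization step that converts each lab’s conventions into one shared representation (a float and an ISO 8601 date). This is a hypothetical Python sketch: the lab names and their conventions are assumptions for illustration, and in practice they would be agreed with the scientists in each lab.

```python
from datetime import datetime

# Hypothetical per-lab conventions (assumptions for illustration only).
LAB_CONVENTIONS = {
    "lab_us": {"decimal": ".", "thousands": ",", "date_format": "%m/%d/%Y"},
    "lab_de": {"decimal": ",", "thousands": ".", "date_format": "%d.%m.%Y"},
}


def parse_number(text: str, lab: str) -> float:
    """Convert a lab-formatted number string to a float."""
    conv = LAB_CONVENTIONS[lab]
    # Drop thousands separators first, then map the decimal separator to ".".
    cleaned = text.replace(conv["thousands"], "").replace(conv["decimal"], ".")
    return float(cleaned)


def parse_date(text: str, lab: str) -> str:
    """Convert a lab-formatted date string to ISO 8601 (YYYY-MM-DD)."""
    conv = LAB_CONVENTIONS[lab]
    return datetime.strptime(text, conv["date_format"]).date().isoformat()


print(parse_number("1,234.5", "lab_us"), parse_number("1.234,5", "lab_de"))  # 1234.5 1234.5
print(parse_date("03/04/2023", "lab_us"), parse_date("03.04.2023", "lab_de"))  # 2023-03-04 2023-04-03
```

The same digits, 03 and 04, map to March 4 in one lab and April 3 in the other, which is why each lab’s convention has to be recorded with the data rather than guessed afterwards.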

Closing Thoughts

Nichola: Within the drug discovery and development process, the initial synthesis of drug product happens several years before it transitions into the development environment. In that transition from discovery to development, I’ve traditionally seen a lot of rework. Development receives the compound and regenerates all the analytical data: they redevelop methods and reassign NMR spectra, which is a terrible duplication of effort. Finding mechanisms to easily share what we learn with later stages of the R&D process helps streamline and facilitate our end goal: delivering safe and effective therapies to patients, faster.

Mark: Effective analytical data management makes labs more efficient. In a global organization you have multiple sites working on the same thing. Being able to exchange information means you don’t have to redevelop methods or rerun samples. Labs can generate data faster, the plants can produce faster, research and innovation can do their job faster. Even if an experiment was a failure and didn’t meet a range of specifications for this application today, it might be exactly what you need 6–9 months later for a different request. Not having to start from scratch and being able to use that as a starting point accelerates the innovation pipeline.

Watch the webinar recording “How to Overcome the Challenges of Analytical Data Management” for more details, including a short discussion from Nichola about AstraZeneca’s Global Analytical Database, or read the article published by The Analytical Scientist “Demystifying Analytical Data Management”.